AI-optimized GPUs for Extreme Performance

Train faster. Infer smarter.

Build the future with next-gen hardware.

AI Hardware Powered by GPUs

AI hardware - especially GPUs (Graphics Processing Units) - is the engine behind machine learning, deep learning, and data-intensive AI workloads.

Unlike standard CPUs, GPUs can perform thousands of computations simultaneously, making them essential for:

- Neural network training

- Real-time inference

- Computer vision

- Natural language processing

- Scientific simulations

Welcome to your one-stop destination for cutting-edge AI hardware - where innovation meets performance.

| Why GPU's for AI? | |

|---|---|

| Feature | Benefit |

| Massive Parallelism | Handle complex models with millions of parameters |

| High Memory Bandwidth | Accelerate large datasets without bottlenecks |

| Tensor Cores | Builth-in deep learning performance optimization |

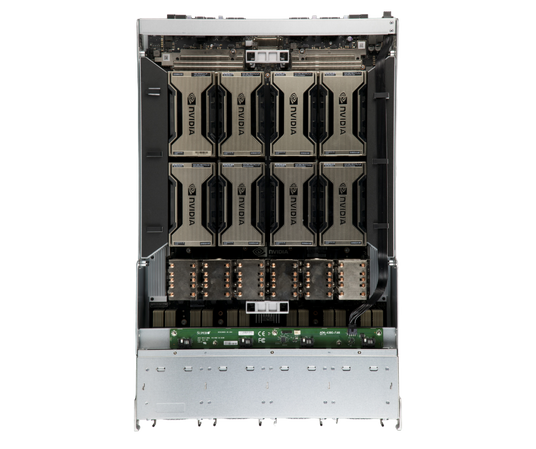

| Scalability | Stackable into multi-GPU systems for enterprise AI |

| Energy Efficiency | More performance per watt than CPU's |

GPUs in Stock

Need help choosing the right GPU?

Whether you’re a solo researcher or managing an enterprise AI deployment, our experts are here to help you find the best fit for your workflow.

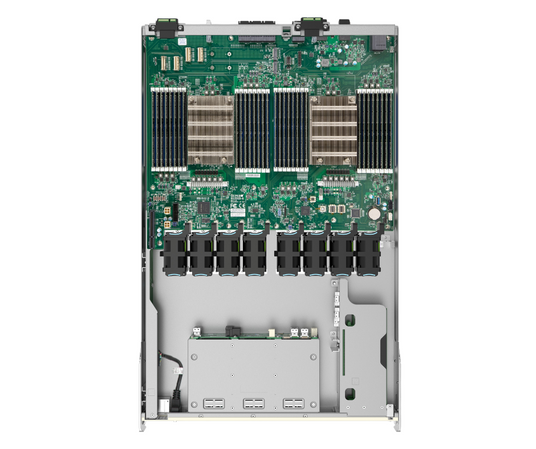

High-performance GPUs designed for data centers, AI, and high-end visualization workloads

What is AI Hardware?

AI hardware refers to specialized computing components - primarily Graphics Processing Units (GPUs) - that are engineered to handle the massive computational demands of modern AI and machine learning workflows.

While CPUs are excellent for general-purpose computing, they run relatively few operations at once and struggle with the highly parallel arithmetic that machine learning depends on.

That's where GPUs come in - delivering the parallel processing power required to train and run complex AI models.
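To make "parallel processing" concrete, here is a minimal Python sketch of a matrix-vector product, the kind of operation at the heart of most models. Each output value depends on only one row of the matrix, so the rows can be computed independently. The function names and the tiny example matrix are illustrative assumptions, and the thread pool only demonstrates that the work splits cleanly; it is not GPU code and is not expected to run faster in plain Python.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vec):
    """Dot product of one matrix row with the input vector."""
    return sum(a * b for a, b in zip(row, vec))


def matvec_serial(matrix, vec):
    """Compute each output element one after another, CPU-style."""
    return [dot(row, vec) for row in matrix]


def matvec_parallel(matrix, vec, workers=4):
    """Hand each row to a worker; no row needs another row's result."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the input rows
        return list(pool.map(lambda row: dot(row, vec), matrix))


matrix = [[1, 2], [3, 4], [5, 6]]  # illustrative values only
vec = [10, 1]

print(matvec_serial(matrix, vec))    # [12, 34, 56]
print(matvec_parallel(matrix, vec))  # [12, 34, 56]
```

A GPU applies the same idea at a much larger scale, assigning independent pieces of the computation to thousands of hardware threads.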

Why Choose AI-optimized GPUs?

Modern GPUs are built with AI workloads in mind.

They go beyond gaming and graphical rendering by offering unique features that accelerate performance, reduce training time, and enhance scalability.

Train Faster, Deploy Smarter

Reduce model training time from days to hours using GPU acceleration.

Scale with Confidence

Move from prototypes to production without bottlenecks. Our GPUs are ready for enterprise-level scaling.

Reduce Operational Costs

Maximize performance per watt with energy-efficient designs built for 24/7 workloads.
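Performance per watt is simply throughput divided by power draw. The sketch below shows the comparison as a small Python calculation. The device names and every number in it are hypothetical placeholders, not measurements or specifications of any real product; substitute your own benchmark throughput and measured power.

```python
def performance_per_watt(throughput: float, power_watts: float) -> float:
    """Throughput (in any consistent unit, e.g. samples/s) per watt drawn."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return throughput / power_watts


# Hypothetical figures for illustration only (not real hardware data)
devices = {
    "device_a": {"throughput": 100.0, "power_watts": 400.0},
    "device_b": {"throughput": 20.0, "power_watts": 200.0},
}

for name, d in devices.items():
    ratio = performance_per_watt(d["throughput"], d["power_watts"])
    print(f"{name}: {ratio:.3f} units of work per second per watt")
```

For 24/7 workloads, a higher ratio means less energy spent for the same amount of work.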

Stay Ahead of the Curve

Our latest GPUs are built for LLMs, reinforcement learning, diffusion models, and real-time inference at the edge.

AI Workloads That Demand GPUs

Neural Network Training

Training models like GPT, Stable Diffusion, YOLO, or ViT relies on enormous volumes of matrix multiplication, far more than a CPU can process in a reasonable time. GPUs make this training faster and more cost-effective.
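To get a rough sense of scale, the sketch below counts the floating-point operations in a single dense-layer matrix multiplication using the standard 2·m·k·n estimate (one multiply and one add per term). The layer sizes and the throughput figures are illustrative assumptions, not specifications of any particular model or device.

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """Approximate FLOPs for an (m x k) @ (k x n) matrix product."""
    return 2 * m * k * n


def seconds_at(flops: int, flops_per_second: float) -> float:
    """Idealized time to finish `flops` at a sustained throughput."""
    return flops / flops_per_second


# Assumed example: a batch of 64 inputs through a 4096 x 4096 layer
batch, d_in, d_out = 64, 4096, 4096
flops = matmul_flops(batch, d_in, d_out)
print(flops)  # 2147483648

# Hypothetical sustained throughputs, chosen only to show the ratio
for label, rate in [("slower device", 1e11), ("faster device", 1e14)]:
    print(label, seconds_at(flops, rate), "seconds")
```

A full training run repeats operations like this across many layers and many steps, which is why sustained throughput dominates training time.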

Inference at the Edge

Once a model is trained, running it in real time (e.g. recognizing faces, translating speech, recommending content) still requires speed. GPUs enable low-latency inference, especially in edge AI and embedded systems.

Reinforcement Learning

Used in robotics and game AI, reinforcement learning requires high-speed simulation environments. GPUs can run these simulations and update policies quickly, accelerating results.

Generative AI

From AI art to synthetic voices, generative models like GANs and diffusion models (e.g. Midjourney, DALL·E) require enormous GPU compute power to produce outputs in seconds.
